A sufficiently intelligent AI would recursively self-improve, until it reached the limits of its hardware. It would most likely be vastly more intelligent than a human.

by Valica » Wed Oct 29, 2014 6:13 am

Utceforp wrote:

(1a) Sentient AI is a long, long way away.

(1b) It's not a threat to us simply because it won't exist in our lifetimes.

(2) If it did exist, however, it wouldn't be a threat.

(3) An AI has as much potential to be a threat as a human does; it depends on what you give them.

(4) An AI with access to nuclear weapons is a potential threat, but so is a human.

(5) Anyway, by the time we have true AI, trans-humanism will have made us so similar to them it would be hard to tell the difference.

Valica is like America with a very conservative economy and a liberal social policy.

Population - 750,500,000

Army - 3,250,500

Navy - 2,000,000

Special Forces - 300,000

5 districts

20 members per district in the House of Representatives

10 members per district in the Senate

(-4.38 | -4.31)

Political affiliation - Centrist / Humanist

Religion - Druid

For: Privacy, LGBT Equality, Cryptocurrencies, Free Web, The Middle Class, One-World Government

Against: Nationalism, Creationism, Right to Segregate, Fundamentalism, ISIS, Communism

"If you don't use Linux, you're doing it wrong."

by Shaggai » Wed Oct 29, 2014 4:52 pm

Valica wrote:

Utceforp wrote:

(1a) Sentient AI is a long, long way away.

(1b) It's not a threat to us simply because it won't exist in our lifetimes.

(2) If it did exist, however, it wouldn't be a threat.

(3) An AI has as much potential to be a threat as a human does; it depends on what you give them.

(4) An AI with access to nuclear weapons is a potential threat, but so is a human.

(5) Anyway, by the time we have true AI, trans-humanism will have made us so similar to them it would be hard to tell the difference.

1a. Not really.

1b. We will almost certainly have full AI within 100 years. An optimist would say by 2040.

2. That's iffy.

3. If they were a full AI, the goal would probably be to make them as humanoid as possible at some point, giving them everything a human has.

4. An AI with a knife is a threat. Same as a human. Why are you trying to make them out to be separate entities?

5. Doubtful. Trans-humanism doesn't directly include removing the blood, brain, etc. AI humanoids wouldn't exactly need these.

The only threat is if we create an AI meant to function through one or more computers.

If we create a standalone AI that functions on a single "brain", then we are almost certainly safe.

by Valica » Thu Oct 30, 2014 5:27 am

Shaggai wrote:

I think that the 100-year estimate is rather quick. I would be willing to bet a nonzero amount of money that it would happen in the next two or three hundred years, certainly, but we're far enough away from AI that 100 years is rather unlikely. I mean, I would only be a little bit surprised, but it's nowhere near "almost certain".

by Lordieth » Thu Oct 30, 2014 6:01 am

by Shaggai » Thu Oct 30, 2014 5:36 pm

Valica wrote:Shaggai wrote:I think that the 100-year estimate is rather quick. I would be willing to bet a nonzero amount of money that it would happen in the next two or three hundred, certainly, but we're far enough away from AI that 100 years is rather unlikely. I mean, I would only be a little bit surprised, but it's nowhere near "almost certain".

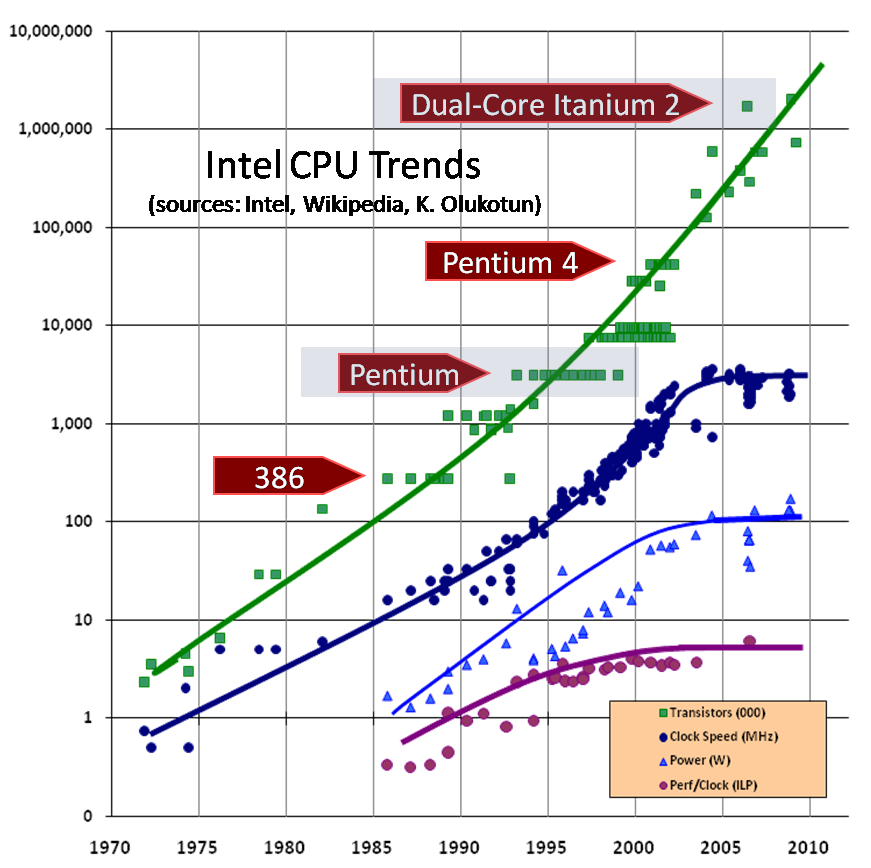

Technology evolves at exponential rates.

What we do now was thought impossible 20 years ago.

What we do in 20 years will probably be called impossible now.

Some people say the singularity of technology will occur in 2040.

I think that estimate is possible but not likely.

I'm betting on 2050-2070.

Lordieth wrote:

Shaggai wrote:

A sufficiently intelligent AI would recursively self-improve until it reached the limits of its hardware. It would most likely be vastly more intelligent than a human.

Also the ability to reproduce beyond human means. If released into the wild, with modern connectivity it could control a vast array of electronic devices. A single AI could bring about a technological dark age.

by Sociobiology » Thu Oct 30, 2014 6:22 pm

Shaggai wrote:

Valica wrote:

Technology evolves at exponential rates.

What we do now was thought impossible 20 years ago.

What we do in 20 years would probably be called impossible today.

Some people say the technological singularity will occur in 2040.

I think that estimate is possible but not likely.

I'm betting on 2050-2070.

We're far enough away from AI that strong AI is unlikely to happen in the next hundred years, even given exponential growth.

Lordieth wrote:

Also the ability to reproduce beyond human means. If released into the wild, with modern connectivity it could control a vast array of electronic devices. A single AI could bring about a technological dark age.

Yup. Anything that could potentially contain a copy of the AI becomes an existential threat.

by Salandriagado » Thu Oct 30, 2014 6:30 pm

by Wisconsin9 » Thu Oct 30, 2014 6:33 pm

by Shaggai » Thu Oct 30, 2014 6:34 pm

Salandriagado wrote:

Shaggai wrote:

A sufficiently intelligent AI would recursively self-improve until it reached the limits of its hardware. It would most likely be vastly more intelligent than a human.

That depends entirely on how much hardware you give it. Additionally, "more intelligent" does not imply "more dangerous". Quite the opposite, in my experience.

by Shaggai » Thu Oct 30, 2014 6:41 pm

Wisconsin9 wrote:

Solution to an AI threat: pull the plug. Literally. How is this not ludicrously obvious? Keep 'em on a leash until we make sure that they're as safe as we can make them.

by Wisconsin9 » Thu Oct 30, 2014 6:45 pm

by Vladislavija » Thu Oct 30, 2014 7:06 pm

Valica wrote:

Technology evolves at exponential rates.

What we do now was thought impossible 20 years ago.

What we do in 20 years would probably be called impossible today.

by Aleckandor REDUX » Fri Oct 31, 2014 8:46 am

by Valica » Fri Oct 31, 2014 8:58 am

by Vladislavija » Fri Oct 31, 2014 10:27 am

Valica wrote:

Vladislavija wrote:

I tend to disagree on all three parts.

And? That simply makes you wrong.

Technology does grow at exponential rates. It's a fact.

We went thousands of years with slow innovation and inventions.

Then came the 19th and 20th centuries.

After the industrial age, machines were booming.

Fast forward to WW2; we were pumping out inventions like it was nobody's business.

Even further to the Cold War; we went to fucking Luna.

Then you get the 90s and the dawn of serious home computing.

Internet speeds were atrocious and all we had was dial-up, but people loved the internet.

Then you come upon the last 10 years and what do you find?

Fiber internet, 3D printing, virtual reality technology, cloning advancements, the ability to render the Earth in 3D in a browser, etc.

I could keep listing shit, but you get the idea.

Technology advancements allow for more technology advancements. It's literally exponential.

Secondly, imagine going back to 1994 and telling people we would have gigabit internet or that we could play games at 4K resolution...

Or show them a picture of the newest iPhone.

They would have called you insane.

Don't even get me started on quantum computing.

The technology wasn't even starting to emerge 20 years ago.

The third point might be the flimsiest, because we now understand that tech evolves rapidly, so we can better gauge what will exist.

But even then, there's no telling what kinds of advancements might be made.

by Lordieth » Fri Oct 31, 2014 10:30 am

Sociobiology wrote:

Shaggai wrote:

We're far enough away from AI that strong AI is unlikely to happen in the next hundred years, even given exponential growth.

With the advent of memristors, AI became much more likely, because they use the same type of information as neurons.

Our real limitation is how well we can image the brain, since it shows how to do most of the things we want an AI to do.

Yup. Anything that could potentially contain a copy of the AI becomes an existential threat.

Not as much as you think. A mind capable of creative thought must by definition be equally capable of error, so don't expect too much from an AI. The more intelligent we make them, the more error-prone they become.

by Vladislavija » Fri Oct 31, 2014 10:32 am

Lordieth wrote:

I'm not sure I agree. Computers are capable of a great level of accuracy, and creativity doesn't necessarily mean error. A lot of what causes human error wouldn't apply to an artificial intelligence. At the very least, its capacity to learn will be beyond that of any human, so while it may make mistakes, it will almost certainly learn from them.

It could make errors in judgement, yes. Morality is subject to such things.

by Utceforp » Fri Oct 31, 2014 12:47 pm

Vladislavija wrote:

Lordieth wrote:

I'm not sure I agree. Computers are capable of a great level of accuracy, and creativity doesn't necessarily mean error. A lot of what causes human error wouldn't apply to an artificial intelligence. At the very least, its capacity to learn will be beyond that of any human, so while it may make mistakes, it will almost certainly learn from them.

It could make errors in judgement, yes. Morality is subject to such things.

I say computers would be as prone to errors as humans. Software bugs can be a bitch to weed out, especially when using data mining. Just remember that AI which was supposed to distinguish tanks from humans and failed miserably.

by Vladislavija » Fri Oct 31, 2014 1:53 pm

Utceforp wrote:

Vladislavija wrote:

I say computers would be as prone to errors as humans. Software bugs can be a bitch to weed out, especially when using data mining. Just remember that AI which was supposed to distinguish tanks from humans and failed miserably.

The difference is that you can fix bugs in an AI, but bugs in humans are hard or impossible to identify or fix.

by The Black Forrest » Fri Oct 31, 2014 1:57 pm

by Norstal » Fri Oct 31, 2014 2:02 pm

Toronto Sun wrote: Best poster ever. ★★★★★

New York Times wrote: No one can beat him in debates. 5/5.

IGN wrote: Literally the best game I've ever played. 10/10

NSG Public wrote: What a fucking douchebag.

by Mefpan » Fri Oct 31, 2014 2:06 pm

by Shaggai » Sun Nov 02, 2014 2:37 pm

Mefpan wrote:

The Black Forrest wrote:

How would it understand compassion?

By programming the AI in a way that includes giving actual values to formless things like trust, loyalty and reliability which could very well be seen as valuable resources given that they're non-negligible factors in causing a preferred reaction in a person?

I mean, we write numbers on fancy slips of paper, call it money and suddenly it's extremely important. Shouldn't be too difficult to make it so that repeated positive interaction with us fleshbags is given some value as well.

by Mefpan » Sun Nov 02, 2014 2:46 pm

Shaggai wrote:

Mefpan wrote:

By programming the AI in a way that includes giving actual values to formless things like trust, loyalty and reliability which could very well be seen as valuable resources given that they're non-negligible factors in causing a preferred reaction in a person?

I mean, we write numbers on fancy slips of paper, call it money and suddenly it's extremely important. Shouldn't be too difficult to make it so that repeated positive interaction with us fleshbags is given some value as well.

Define trust, loyalty, reliability, compassion, and positive interaction.
